Robots.txt for SEO on a Blogger Website

A robots.txt file is a plain text file that tells search engine crawlers which URLs on a website they may crawl. It must be placed at the root of the host (for example https://bitecc.blogspot.com/robots.txt) and is used to manage the activity of web crawlers. Common reasons to use one:

Prevent overloading: Avoids overloading the site with requests from search engines

Control bandwidth: Reduces unnecessary crawler traffic, which helps control the site's bandwidth usage

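Ensure relevant results: Steers crawlers away from low-value pages such as internal search results, which helps keep search results clean and relevant for users. Blocking a URL does not guarantee it stays out of search results, because it can still be indexed if other pages link to it; a noindex tag is the reliable way to keep a page out.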

Hide resources: Exclude resources like images, videos, and PDFs from search results

Manage crawl budget: Helps search engines spend their limited crawl time on the pages that matter instead of on duplicate or low-value URLs.

Here are some things to keep in mind about robots.txt files:

Rules: A robots.txt file consists of one or more groups. Each group starts with a User-agent line naming the crawler it applies to, followed by rules that allow or block access to specific URL paths.

Default behavior: All files are implicitly allowed for crawling unless otherwise specified.

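Allow directive: An Allow rule can override a Disallow rule. When both match a URL, Google applies the most specific (longest) rule, and if the two are equally specific, the Allow rule wins.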

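Regular expressions and wildcards: robots.txt does not support full regular expressions. It does support two special characters, standardized in RFC 9309 and honored by Google and Bing: an asterisk (*), which matches any sequence of characters, and a dollar sign ($), which marks the end of the URL.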

Yoast SEO: Yoast SEO can be used to create or edit a robots.txt file in the WordPress Dashboard.
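The matching rules described above (Allow overriding Disallow, longest match winning, and the * and $ wildcards) can be sketched in a few lines of Python. This is a minimal illustration, not Google's actual parser: it assumes the rules for a single user-agent group have already been extracted as (directive, pattern) pairs, and it ignores percent-encoding normalization. The helper names `is_allowed` and `_to_regex` are hypothetical.

```python
import re


def _to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters; a trailing '$' anchors the
    pattern to the end of the URL path. Everything else is literal.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Decide whether `path` may be crawled under one group's rules.

    rules: (directive, pattern) pairs such as ("disallow", "/search").
    The longest matching pattern wins; on a tie, Allow wins.
    A path that matches no rule is allowed (the default behavior).
    """
    best_len, verdict = -1, True
    for directive, pattern in rules:
        if not pattern:  # an empty Disallow blocks nothing
            continue
        # re.match anchors at the start, since patterns match from the path start
        if _to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_len or (length == best_len and directive == "allow"):
                best_len, verdict = length, directive == "allow"
    return verdict


# Example: the Blogger rules used in Method 2 of this post.
blogger_rules = [("disallow", "/search"), ("allow", "/")]
print(is_allowed("/search/label/SEO", blogger_rules))      # False
print(is_allowed("/2024/01/my-post.html", blogger_rules))  # True
```

Here "/search" (7 characters) beats "/" (1 character) for label pages, which is why the Allow: / line does not undo the Disallow.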

How to add a custom robots.txt in Blogger

Method 1

Steps:

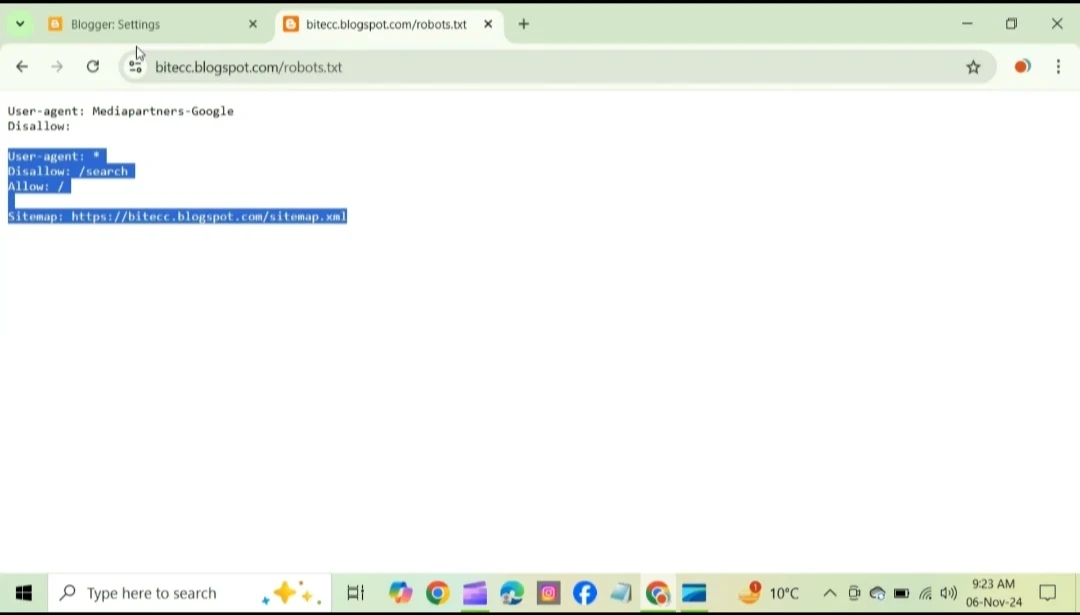

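1. Open your blog's robots.txt in a browser by typing your blog's address followed by /robots.txt, for example https://bitecc.blogspot.com/robots.txt. Blogger generates this file automatically.

2. Copy the displayed text. In the Blogger dashboard, go to Settings, scroll down to "Crawlers and indexing", and turn on "Enable custom robots.txt". Then open "Custom robots.txt", paste the copied text, and click Save.

3. Turn on "Enable custom robots header tags".

4. Home page tags: turn on "all" and "noodp", then click Save. (noodp is a legacy tag that current search engines ignore, so enabling it does no harm.)

5. Archive and search page tags: turn on "noindex" and "noodp", then click Save. This keeps archive, label, and search pages, which repeat your post content, out of search results.

6. Post and page tags: turn on "all" and "noodp", then click Save.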

That's it: your custom robots.txt and robots header tags are now in place.
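Steps 1 and 2 can also be done from a script instead of a browser. The sketch below assumes Python 3 and network access; `robots_url` and `fetch_robots` are hypothetical helper names, not part of any Blogger tool. Because robots.txt always sits at the root of the host, any page URL from the blog works as the starting point.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the host of any page URL.

    The absolute path "/robots.txt" replaces the page's path and query,
    keeping only the scheme and host.
    """
    return urljoin(page_url, "/robots.txt")


def fetch_robots(page_url: str, timeout: float = 10.0) -> str:
    """Download the current robots.txt text (step 1), ready to paste
    into Blogger's Custom robots.txt box (step 2)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Usage (needs network access; replace with your own blog address):
# print(fetch_robots("https://bitecc.blogspot.com/"))
```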

Method 2

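Simple and easy: copy the text below, replace bitecc.blogspot.com in the Sitemap line with your own blog's address, paste it into Custom robots.txt, and click Save, as in Method 1. These rules let every crawler access the whole blog except /search (Blogger's search-result and label pages) and point crawlers to your sitemap.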

    User-agent: *
    Disallow: /search
    Allow: /

    Sitemap: https://bitecc.blogspot.com/sitemap.xml
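To check what these rules allow before saving them, Python's standard-library urllib.robotparser can parse them locally with no network request. This is a minimal sketch assuming Python 3.8 or later (needed for site_maps()); `allowed` is a hypothetical helper and the post URLs are made-up examples. Note that this parser applies rules in file order (first match wins), whereas Google uses the most specific match; for these two rules both approaches give the same answers.

```python
from urllib.robotparser import RobotFileParser

# The Method 2 rules; replace the Sitemap URL with your own blog's address.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /

Sitemap: https://bitecc.blogspot.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() takes the file as a list of lines, so nothing is downloaded.
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url: str, agent: str = "Googlebot") -> bool:
    """True if `agent` may crawl `url` under the rules above."""
    return parser.can_fetch(agent, url)


base = "https://bitecc.blogspot.com"
for path in ["/", "/2024/01/my-post.html", "/search?q=seo", "/search/label/SEO"]:
    print(path, "->", "allowed" if allowed(base + path) else "blocked")
print("Sitemaps:", parser.site_maps())
```

Keep User-agent, Disallow, and Allow on consecutive lines. Python's parser treats a blank line as the end of a group, so a blank line directly after User-agent: * would make it discard the rules that follow. Google tolerates such blank lines, but other parsers may not.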
